Last update: June 1, 2021

The present contribution is related to my recent thread in the Coqui discussion forum.

Before telling the story of my experience with Text-To-Speech (TTS) synthesis, I would like to show the current state of the art of open-source machine-learning (ML) technologies. I will do this by presenting sound samples synthesized with English, French and German TTS models created by Coqui.ai, a young start-up launched in March 2021 from the remains of the Mozilla speech projects.

The test utterance I used for the synthesis is the first sentence of the fable The North Wind and the Sun. The fable is well known for its use in phonetic descriptions as an illustration of spoken language, both in the Handbook of the International Phonetic Association and in the Journal of the International Phonetic Association.

Here are the three test sentences in English, French and German:

The North Wind and the Sun were disputing which was the stronger when a traveller came along wrapped in a warm cloak.

La bise et le soleil se disputaient, chacun assurant qu’il était le plus fort, quand ils ont vu un voyageur qui s’avançait, enveloppé dans son manteau.

Einst stritten sich Nordwind und Sonne, wer von ihnen beiden wohl der Stärkere wäre, als ein Wanderer, der in einen warmen Mantel gehüllt war, des Weges daherkam.

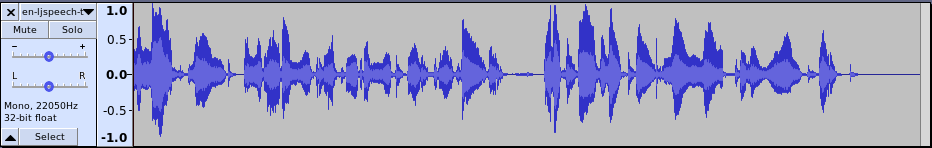

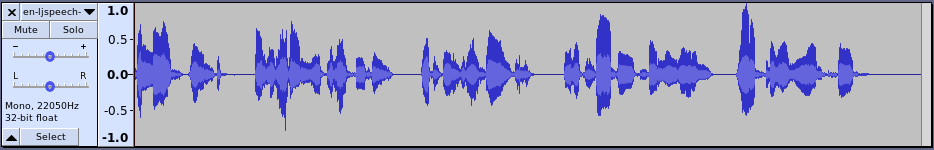

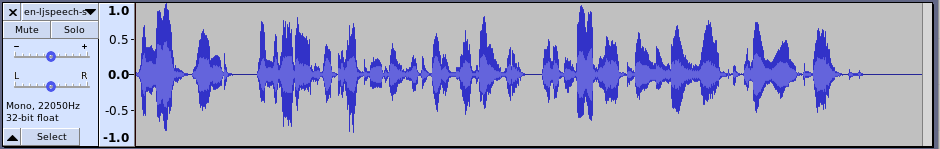

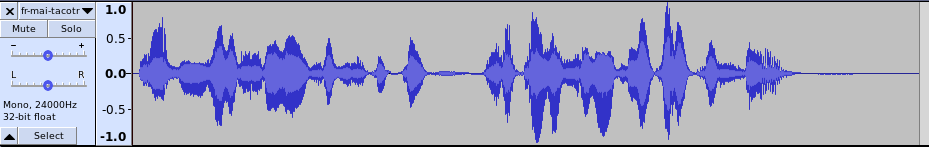

Here are the synthesized sound samples and waveforms from several released Coqui-TTS models:

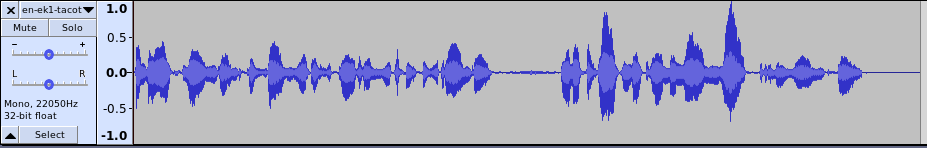

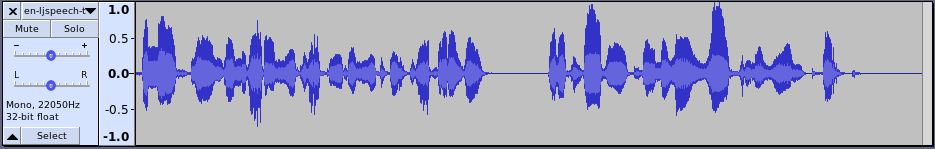

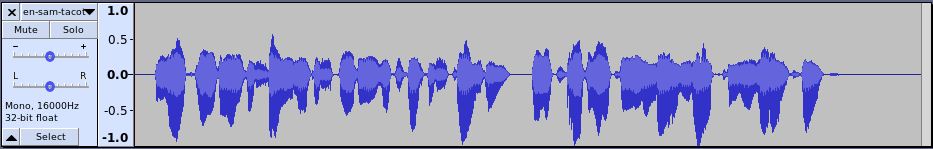

| # | Model | Language | Dataset | WAV size |
|---|-------|----------|---------|----------|
| 1 | tacotron2 | English | EK1 | 296.5 KB |
| 2 | tacotron2-DDC | English | LJSpeech | 328.3 KB |
| 3 | tacotron2-DDC | English | SAM | 256.6 KB |
| 4 | tacotron-DCA | English | LJSpeech | 303.7 KB |
| 5 | glow-tts | English | LJSpeech | 361.0 KB |
| 6 | speedy-speech-wn | English | LJSpeech | 338.0 KB |
| 7 | tacotron-DDC | French | MAI | 237.6 KB (problem) |
| 8 | tacotron-DCA | German | Thorsten | 479.8 KB |

My TTS story

Over the next decade, speech is expected to become the primary way people interact with devices, from phones and laptops to digital assistants. Yet today's voice-enabled devices are inaccessible to most of the planet's languages and accents. Currently, neither Amazon's Alexa, Apple's Siri nor Google Home supports Luxembourgish, a West Germanic language spoken by about 600,000 people in Luxembourg and in the border regions of the neighbouring countries Belgium, France and Germany. The same is true for Africa: not a single native African language is supported by the GAFAM companies.

I have always been interested in voice technologies. Last year I published a book about the history of speech synthesis, starting with the mechanical speaking heads of the Middle Ages.

Besides the speaking heads, the talking machines of Wolfgang von Kempelen and Josef Faber became famous. In the second half of the 19th century, the first electromagnetic speech devices were designed; the pioneers were Joseph Henry, Alexander Graham Bell and Thomas Edison. Pedro the Voder, created by Homer Dudley, was exhibited at the 1939 World's Fair in New York.

In the late 1940s, Franklin S. Cooper designed the Pattern Playback machine, which converted spectrograms into speech. It was a kind of forerunner of Tacotron.

At an MIT conference in 1956, Gunnar Fant and Walter Lawrence demonstrated their synthesizers OVE-I and P.A.T. in an interactive dialog session. Both devices were controlled by several parameters that changed the formants and other features of the speech. The first commercial speech synthesizer was the electronic Bell speech kit, launched in early 1960. It was the last project developed by Homer Dudley.

Transistors were progressively replaced by integrated circuits. The state of the art in speech synthesis at that time was linear predictive coding (LPC). This technology was implemented in the TMC0281 integrated circuit, developed by Texas Instruments for the famous Speak & Spell toy launched in June 1978.

In 1974, Richard Thomas Gagnon obtained a license for an electronic phoneme-based synthesizer called VOTRAX. His employer, the company Federal Screw Works, produced these devices with great success. Ten years later, in 1984, this synthesizer was renamed Votalker and sold as a plug-in card for the IBM PC, Apple II and Commodore 64 computers. The same year, Digital Equipment Corporation (DEC) launched DECtalk, a standalone synthesizer with an RS-232 serial interface. It was based on the program KlatTalk, developed by Dennis Klatt in 1982.

Dennis Klatt is considered the father of speech synthesis software. He recorded his own voice to extract the speech features for the program. The first synthesizer used by Stephen Hawking spoke with the voice of Dennis Klatt.

The Klatt synthesizer became famous with the general public when Jonathan Duddington integrated this technology into his speech software for Acorn computers. He started developing this tool, named Speak, in 1995. In 2006 Speak became eSpeak, which was at that time a very popular open-source program running on all kinds of operating systems. Jonathan Duddington's last message on the Internet was posted on April 16, 2015. To my knowledge, nobody in the large eSpeak community knows what happened to him.

At the end of 2015, the community entrusted the coordination of the project to Reece Dunn, and a new GitHub repository named eSpeak-NG (new generation) was created. Because eSpeak-NG supports more than 100 languages, the tool is commonly used today as a grapheme-to-phoneme conversion front-end for high-end TTS and STT engines.

The development of high-quality speech synthesizers began at the end of the 20th century. Edinburgh, Mons, Nagoya, Pittsburgh and Saarbrücken were the centers where the related technologies were developed at universities and then marketed by spin-offs or licensed to large companies. Every software engineer involved in developing speech tools knows major projects such as CMU Sphinx, Festival, Festvox, Flite, FreeTTS, HTK, HTS, Kaldi, MaryTTS, MBROLA and SPTK.

MaryLux is the only Luxembourgish synthetic voice created so far. It was developed in 2014 with the MaryTTS technology.

About ten years ago, the landscape of speech technologies changed, and deep learning became the new fetish. Amazon, Apple, AT&T, Baidu, Facebook, Google, IBM and Microsoft were the dominant players in artificial intelligence (AI), neural networks (NN) and machine learning (ML) for creating TTS and STT models. The new ML projects bore names such as DeepVoice, GAN, Glow, Tacotron and WaveGrad. In ML hardware, NVIDIA became the new king.

In July 2017, Mozilla launched the Common Voice project to help make voice recognition open to everyone. In November of the same year, Mozilla described the initial release of DeepSpeech, its open-source speech recognition ML model, which used the Common Voice dataset; nearly 20,000 people worldwide had contributed to that dataset by then.

In early 2018 the Mozilla-TTS GitHub repository was created, but its first and only release, version 0.0.9, did not appear until January 2021. On August 11, 2020, Mitchell Baker, CEO of Mozilla, announced that the world, the Internet and Mozilla were changing and that Mozilla would be restructured to focus on Firefox. In an internal message, Mozilla employees were informed that the changes also included a significant reduction of the workforce, by approximately 250 people. This was the beginning of the end of Mozilla STT and Mozilla TTS.

This restructuring of Mozilla was probably also the starting point of the Coqui.ai initiative, dedicated to open speech technology and to serving as the hub where speech researchers, developers and practitioners congregate. The start-up Coqui.ai was founded in March 2021 by four machine learning (ML) experts with strong experience in the Mozilla deep-learning voice projects for STT (speech-to-text) and TTS (text-to-speech).

The founders of the start-up Coqui.ai are:

Kelly Davis worked at Mozilla in Germany from April 2015 to September 2020. For the last three years, he was the manager of the Mozilla Machine Learning Group. Before that, he worked as a software engineer and research programmer at various companies and startups, and he founded the startup forty.to in 2012. Kelly Davis has a BS from MIT and a PhD from Rutgers University (1997).

Eren Gölge was a senior research engineer at Mozilla Germany from January 2018 to February 2021. He has an MS from Bilkent University (2014) and was a PhD candidate until mid-2017. During his studies he worked for various companies, and he co-founded the startup 8bit.ai in 2014. He announced the creation of Coqui.ai on March 15, 2021 in the Mozilla discussion forum.

Josh Meyer has a BA from Seton Hall University and a PhD in computational linguistics from the University of Arizona (2019). During his studies he worked as a research assistant and consultant for various companies and projects, including an internship at Mozilla in the San Francisco Bay Area. From January 2020 to April 2021 he worked as a Machine Learning Fellow at Mozilla on open voice technology projects in East Africa.

Reuben Morais has a technical degree in industrial informatics from CEFET-MG in Belo Horizonte (2011). Since 2010 he has been a volunteer contributor to Mozilla, and he has worked for several companies. In 2015 he continued his studies at the Federal University of Minas Gerais (UFMG) in Belo Horizonte and obtained a BS in information systems in 2018. During his studies he kept working as an ML research engineer, through Upwork Global Inc., as a contractor for Mozilla. Since July 2019 he has worked as a senior research engineer for Mozilla.

Besides Coqui.ai, there are other great communities working on TTS and STT. One example is Rhasspy, an open-source, fully offline set of voice assistant services created by Michael Hansen, also known as synesthesiam. Another is Mycroft AI, the open answer to Amazon Echo and Google Home, launched on Kickstarter in 2015.

I might write a second book about speech synthesis, presenting the history of the open-source ML projects realized by the communities of Mycroft AI (Mimic), Rhasspy, Coqui.ai and others. Before starting this undertaking, I want to fulfill my dream of developing a high-quality Luxembourgish speech synthesizer with the tools and kind help of these communities. Perhaps I can even go further and create a multilingual (Luxembourgish, German, French and English) and multi-speaker model.

Rodange, June 1, 2021

Marco Barnig