Last update: May 18, 2013

A rolling ball clock is a clock that displays the time by means of balls and rails. It was invented by Harley Mayenschein in the 1970s. He patented the design (US Patent 4,077,198, issued on March 7, 1978) and in 1978 founded Idle Tyme Corporation, which manufactured these clocks from solid hardwoods.

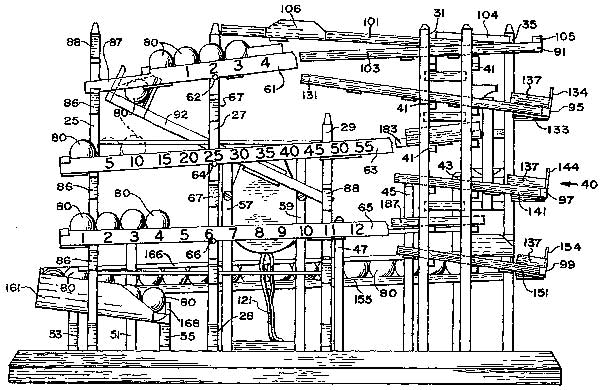

Figure of US Patent 4,077,198

The clock uses steel balls to indicate the time to the minute. Three main rails are numbered for hours and minutes: the bottom rail shows the hours, and the middle and upper rails together show the minutes. An electric motor scoops up a ball every minute and delivers it to the top rail. Every five minutes, the top rail dumps and deposits one ball on the middle rail. Every hour, the upper and middle rails dump and one ball is transferred to the bottom rail to increment the hours.
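The counting scheme described above can be sketched in a few lines of Python. This is only an illustrative model, not a description of the actual mechanism: the function names `rail_counts` and `tick` are made up for this example, and the rail capacities (up to 4 balls on the top rail, 11 on the middle rail, 11 on the bottom rail) as well as the idea that an empty bottom rail reads 1 o'clock are assumptions inferred from the five-minute and hourly dumps.

```python
def rail_counts(hour: int, minute: int) -> tuple[int, int, int]:
    """Return (top, middle, bottom) ball counts for a 12-hour clock time."""
    if not (1 <= hour <= 12 and 0 <= minute <= 59):
        raise ValueError("expected hour in 1..12 and minute in 0..59")
    top = minute % 5       # single minutes: 0..4 balls
    middle = minute // 5   # five-minute steps: 0..11 balls
    bottom = hour - 1      # assumption: an empty hour rail reads 1 o'clock
    return top, middle, bottom


def tick(top: int, middle: int, bottom: int) -> tuple[int, int, int]:
    """Advance the model by one minute (one ball scooped onto the top rail)."""
    top += 1
    if top == 5:               # fifth ball: top rail dumps, one ball moves down
        top = 0
        middle += 1
        if middle == 12:       # twelfth ball: middle rail dumps, hour advances
            middle = 0
            bottom += 1
            if bottom == 12:   # assumed behaviour at 12:59: hour rail dumps too
                bottom = 0
    return top, middle, bottom


if __name__ == "__main__":
    state = rail_counts(12, 59)
    print(state)         # balls on each rail at 12:59
    print(tick(*state))  # one minute later, all rails are empty again
```

In this model, going from 12:59 to 1:00 empties all three rails at once, while every other hour change only empties the top and middle rails.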

Original Rolling Ball Clock

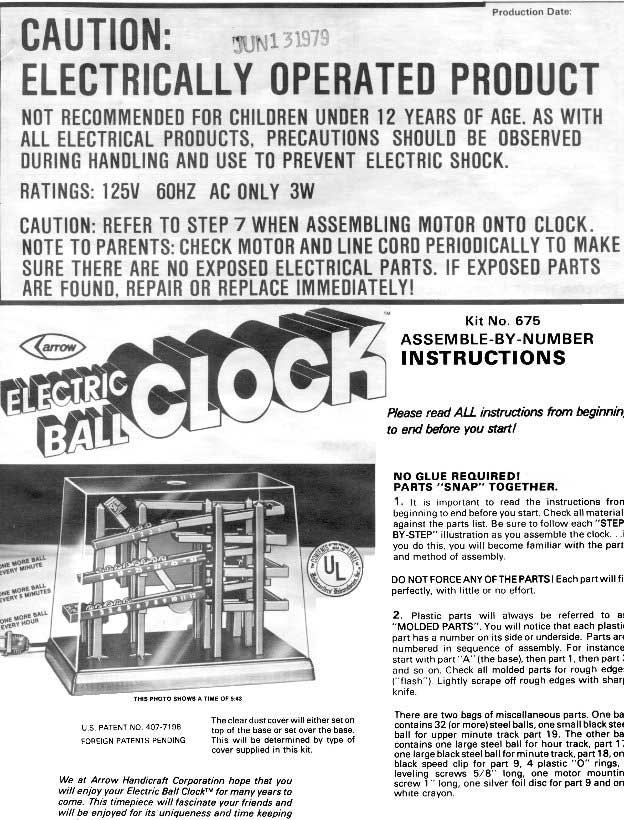

Mayenschein licensed the patent to Arrow Handicraft Corporation. The clocks were sold as kits that could be assembled in one hour.

First page of the assembly instructions of the Arrow Ball Clock kit

Arrow Handicraft Corporation made several versions of the clock kit: Classic, Large, Deluxe, and a Domino kit. Mattel also made the ball clocks under license.

When Harley passed away in 1985, the family closed the business. Joe Mayenschein, Harley’s son and primary clock maker, started the business again a few years ago. It is a limited production operation; about five clocks per week are made and shipped on a first come, first served basis. Three options to order a clock are offered, in a choice of four finishes (walnut, cherry, oak, black satin), at prices between $253 and $300.

The current version of the Arrow Rolling Ball Clock comes from the company Can You Imagine and is now called Time Machine – Kinetic Display Clock. Assembly is no longer required. All you have to do is put the balls on the rails. The Time Machine now has a seconds wheel which lets you see exactly when a ball is about to get scooped up.

I received a Time Machine today as a Christmas gift and enjoy watching the 1:00 drop, when all three rails dump their balls to the feed rail at the bottom.

Adam Bowman (aBowman), a web developer who lives in Hallowell, Maine, creates small gadgets that can be added to webpages or your desktop. One of these gadgets is a rolling ball clock.

Additional information about rolling ball clocks and related topics is available at the following links:

- Time Machine rolling ball clock, by Daniel Rutter

- Stuart’s Rolling Ball Clock Page, by Stuart Singer

- Repairing a rolling ball clock, by Harry Siebert

- Ball Clocks – A Brief History of a Wonderful Clock, by Paul Sung

- YouTube playlist of rolling ball clock videos