Last update : August 21, 2013

It’s not easy to configure an AVC (H264) codec to create videos which will play on different devices and stream from various servers on the web, including Amazon S3 Cloudfront. Some basic information about the different frame types of AVC is given in the post Smart editing of MPEG-4/H264 videos. The following list describes the common H264 parameters :

CABAC : stands for Context Adaptive Binary Arithmetic Coding. Improves encoding efficiency at the expense of playback/decoding efficiency. The default option is on, unless the encoded video is to be played back on devices with limited decoding power (for example iPod). CABAC is only supported by the main and higher profiles.

Trellis : Trellis is only available with CABAC on. It improves quality, while maintaining a small file size but it will increase conversion time slightly. The default value is on.

Encoding mode :

- Single Pass – Bitrate: encodes the video once with a set constant bitrate for each frame

- Single Pass – Quantizer: encodes the video with a set quantizer (higher quantizer => lower quality) for each frame. The default value is 26, the maximum value is 51.

- Single Pass – Quality: encodes the video with a set quality rating for each frame

- Two Pass: encodes the video twice (once to determine its properties, and once more to ensure the selected output file size is reached with maximum efficiency). This is the most common setting.

- Multi Pass: Same as Two Pass except for extra encoding passes to ensure even better quality/accurate file size. During multipass encoding, the video results of the first pass are saved into a log file. In a second step the encoding is done based on the logfile data.
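The link between a selected output file size and the average bitrate that a two-pass encode aims for is simple arithmetic. The Python sketch below is only an illustration: the function name and the optional audio parameter are assumptions made for this example and do not come from any encoder.

```python
def video_bitrate_kbps(target_size_bytes, duration_s, audio_kbps=0):
    """Average video bitrate (Kbit/s) needed to hit a target file size.

    Assumption: container overhead is ignored and 1 Kbit = 1000 bits.
    """
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    # Total bits available, spread evenly over the duration.
    total_kbps = target_size_bytes * 8 / 1000 / duration_s
    # Whatever the audio track uses is not available for video.
    return total_kbps - audio_kbps


# A 60 second clip that should fit in 9,000,000 bytes:
print(video_bitrate_kbps(9_000_000, 60))        # 1200.0
print(video_bitrate_kbps(9_000_000, 60, 128))   # 1072.0
```

The first result happens to match the high quality default of 1200 Kbit/s mentioned below.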

Bit Rate : the average bitrate varies between 0 and 5000 Kbit/s; the default values are 800 Kbit/s for low quality, 1000 Kbit/s for medium quality and 1200 Kbit/s for high quality.

- Keyframe Boost : High values give better visual quality but also bigger file sizes. The default value for I-Frames is 40%. Values vary from 0 to 70.

- B-Frame reduction : these frames are responsible for the interpretation of motion in the video. This setting determines the reduction of quality in B-frames in favor of P-frames (predicted pictures). The default value is 30%, the range varies from 0 to 60%. For cartoons higher values are recommended.

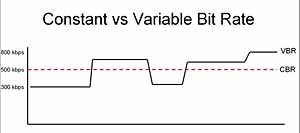

- Bitrate variability : This attribute indicates how far the bitrate is allowed to deviate from the target bitrate. A variable bitrate tells the encoder to vary the bitrate as needed, based on the information in the frames. The default value is 60%, the range varies from 0 to 100%.

Quantization limits : these values are only used when the Single Pass – Quantizer encoding mode is selected.

- Min QP : Values vary from 0 to 50, the default value is 10.

- Max QP : Values vary from 0 to 51, the default value is 51

- Max QP step : Values vary from 0 to 50, the default value is 4.
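One plausible reading of these three limits is that the quantizer of each frame is kept between Min QP and Max QP, and may not move by more than Max QP step from the previous frame. The following Python sketch illustrates that reading with the default values listed above. It is a simplified assumption, not the encoder's actual rate control, and the function name is hypothetical.

```python
def next_qp(previous_qp, wanted_qp, min_qp=10, max_qp=51, max_step=4):
    """Limit the quantizer of the next frame (illustrative only)."""
    # The new QP may not move more than max_step away from the previous one...
    lowest = max(min_qp, previous_qp - max_step)
    highest = min(max_qp, previous_qp + max_step)
    # ...and must also stay inside the [min_qp, max_qp] range.
    return max(lowest, min(highest, wanted_qp))


print(next_qp(26, 40))  # 30: limited by the step of 4
print(next_qp(12, 0))   # 10: limited by Min QP
print(next_qp(50, 60))  # 51: limited by Max QP
```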

Scene cuts : this option sets how H264 determines when a scene change has occurred and hence when a key frame is needed.

- Scene cut threshold : The default value is 40. A higher value will allow H264 to be less sensitive to scene changes. A lower value is recommended for dark videos.

- Min IDR frame interval : IDR means Instantaneous Decode Refresh. This parameter sets the number of frames that must pass after a keyframe before the encoder can act on a new scene change. Setting it too high results in not detecting enough scene changes. Setting it too low results in an unnecessarily high bitrate. The range varies from 0 to 100,000, the default value is 25.

- Max IDR frame interval : Setting this too low results in too many keyframes and thus wastes bitrate. The range varies from 0 to 100,000, the default value is 250.
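How the two intervals interact with scene cut detection can be sketched in a few lines of Python. This is a simplified model under stated assumptions: scene changes are supplied as a ready-made list of frame numbers (a real encoder derives them from the scene cut threshold), and the function name is hypothetical.

```python
def place_keyframes(num_frames, scene_cuts, min_interval=25, max_interval=250):
    """Return the frame numbers that become keyframes (illustrative only)."""
    scene_cuts = set(scene_cuts)
    keyframes = []
    last = None
    for frame in range(num_frames):
        if last is None:
            # The first frame always has to be a keyframe.
            keyframes.append(frame)
            last = frame
            continue
        gap = frame - last
        forced = gap >= max_interval                      # Max IDR interval reached
        allowed_cut = frame in scene_cuts and gap >= min_interval
        if forced or allowed_cut:
            keyframes.append(frame)
            last = frame
    return keyframes


# Scene changes at frames 10, 30 and 300 in a 400 frame video:
print(place_keyframes(400, [10, 30, 300]))  # [0, 30, 280]
```

The cuts at frames 10 and 300 are ignored because they fall within 25 frames of the previous keyframe, and frame 280 becomes a keyframe only because 250 frames have passed without one.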

Partitions : During the encoding process, the encoder will break down the video into so-called Macroblocks. Then it will search for similar blocks in order to discard redundant data. The macroblocks can be subdivided into 16×8, 8×16, 8×8, 4×8, 8×4, and 4×4 partitions. The partition searches increase accuracy and compression efficiency. As a general rule, the more search types are performed, the better and stronger the compression will be while maintaining a high quality output.

- 8×8 transform : the 8×8 Adaptive DCT transform is a very powerful compression technique but it is not compatible with every device. It makes the video High Profile AVC.

- 8×8, 8×16 and 16×8 P-Frame search : This setting enables the 8×8 partitions on P-Frames and thus improves the visual quality of these frames.

- 8×8, 8×16 and 16×8 B-Frame search : This setting enables the 8×8 partitions on B-Frames and thus improves the visual quality of these frames.

- 4×4, 4×8 and 8×4 P-Frame search : This setting enables the 4×4 partitions on P-Frames, but the quality improvement is usually negligible. This option is therefore not worth the additional encoding time and can safely be turned off.

- 8×8 intra search : This setting enables the 8×8 partitions on I-Frames and thus improves the visual quality of these frames, but it requires the 8×8 Adaptive DCT Transform.

- 4×4 intra search : This setting enables the 4×4 partitions on I-Frames and thus improves the visual quality of these frames.

B-Frames :

- Use as a reference : allows a B-Frame to reference another B-Frame to provide better quality. Only useful when using more than 2 consecutive B-Frames.

- Adaptive : Turns on adaptive B-frames, which allows H264 to determine the number of B-frames to use. The default value is on. This option is only available when at least 1 B-frame has been set.

- Bidirectional ME : allows predictions based on motion both before and after the B-frames. Default value is on.

- Weighted bipredictional : allows B-Frames to be predicted more heavily from P-Frames which results in improved accuracy and therefore a more efficient encoding. Default value is on. This option is only available when at least 1 B-frame has been set.

- Direct B-Frame mode : temporal or spatial. The default value is temporal. The spatial mode handles animated content better.

- Max consecutive : the number of consecutive B-Frames. The values vary from 0 to 5, the default value is 3.

- Bias : Sets how strongly H264 should favor the usage of B-frames (higher means more use of B-frames). Setting this to 100 is the equivalent of not selecting the “Adaptive” option. The default value is 0, possible values vary from -100 to +100.

Motion estimation :

- Partition decision : This controls the precision with which the motion in the video is estimated. Values range from 1 to 6. The default value is 5. A setting of 6 is even better but it strongly increases the amount of time needed for the conversion.

- Method : The better the method, the more efficient the compression and the higher the output quality. Hexagonal Search is the default setting. Uneven Multi-hexagon is meant for powerful computers, while Exhaustive search is so slow that it is only practical on very fast machines.

- Range : this field is disabled when you select Hexagonal Search. It only works with the more powerful methods and specifies the motion search range in pixels. The more pixels are examined, the more processor power is needed, but the better the outcome. The values vary from 0 to 64, the default value is 16.

- Max Ref Frames : This value indicates how many previous frames can be referenced by a P-frame or B-frame. The higher this value, the better the quality at the expense of speed. The values vary from 0 to 16, the default value is 0.

- Mixed references : offers the codec greater freedom to make references on a smaller scale. This option is only available when the Max Ref Frames value is greater than 1.

- Chroma ME : uses the color information in the video to estimate motions, which increases the visual quality. It is recommended to set this option on.

Misc. options :

- Threads : This sets the number of CPU threads to use in encoding. Default value is 1.

- Noise reduction : whether to use this setting depends on whether there is noise in the video images. Videos with noise appear grainy. Noise reduction filters out that noise, and the more noise you have, the higher you need to set the value. Values vary from 0 to 65535. The default value is 0.

- Deblocking filter : A deblocking filter is a video filter applied to blocks in decoded video to improve visual quality and prediction performance by smoothing the sharp edges which can form between macroblocks when block coding techniques are used. The strength (values from -6 to +6) and threshold (values from -6 to +6) of the filter are set. The default values are 0 and 0.
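Many of the settings above have close counterparts among the command line options of the x264 encoder. The Python sketch below builds such an option list from the default values given in this post. It rests on two assumptions that the post itself does not confirm: that the settings dialog described here behaves like x264, and that "Partition decision" corresponds to the `--subme` option. The dictionary keys and the function name are hypothetical.

```python
def x264_options(settings):
    """Translate a dict of settings from this post into x264 CLI options.

    Assumption: the mapping between dialog fields and x264 options is a
    best guess; check the output of `x264 --fullhelp` before relying on it.
    """
    s = settings
    opts = []
    if not s.get("cabac", True):
        opts.append("--no-cabac")
    opts += ["--bitrate", str(s.get("bitrate_kbps", 1000))]
    opts += ["--qpmin", str(s.get("min_qp", 10)),
             "--qpmax", str(s.get("max_qp", 51)),
             "--qpstep", str(s.get("max_qp_step", 4))]
    opts += ["--scenecut", str(s.get("scene_cut", 40)),
             "--min-keyint", str(s.get("min_idr", 25)),
             "--keyint", str(s.get("max_idr", 250))]
    opts += ["--bframes", str(s.get("max_b_frames", 3))]
    method = s.get("me_method", "hex")  # hex, umh or esa
    opts += ["--me", method, "--subme", str(s.get("partition_decision", 5))]
    if method != "hex":
        # The range is only meaningful for the more powerful methods.
        opts += ["--merange", str(s.get("me_range", 16))]
    deblock = "{}:{}".format(s.get("deblock_strength", 0),
                             s.get("deblock_threshold", 0))
    opts += ["--nr", str(s.get("noise_reduction", 0)),
             "--deblock", deblock,
             "--threads", str(s.get("threads", 1))]
    return opts


# Settings for a device with limited decoding power:
print(" ".join(x264_options({"cabac": False, "bitrate_kbps": 800})))
```

Input and output file names are deliberately left out; only the options that mirror the list above are generated.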

The AVC specifications define a number of different profiles specifying which compression features of H.264 are allowed or forbidden. In addition to the profiles, the AVC specifications also define a number of levels putting further restrictions on other properties of the video. These restrictions include the maximum resolution, the maximum bitrate and the maximum framerate. The common notation for Profiles and Levels is “Profile@Level”, for example Main@3.1.
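The “Profile@Level” notation is easy to split programmatically. The small Python sketch below is only an illustration, and the function name is hypothetical. The level is kept as text rather than converted to a number, so that a value such as 3.1 is preserved exactly as written.

```python
def parse_profile_level(text):
    """Split a 'Profile@Level' string into its two parts."""
    profile, separator, level = text.partition("@")
    profile, level = profile.strip(), level.strip()
    if not separator or not profile or not level:
        raise ValueError("expected 'Profile@Level', got %r" % text)
    return profile, level


print(parse_profile_level("Main@3.1"))  # ('Main', '3.1')
```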

The most common profiles for webstreaming are baseline (BP) and main (MP). Some differences in the features for these profiles are shown hereafter :

| Compression features | Baseline Profile | Main Profile |
| --- | --- | --- |
| B-Frames | no | yes |
| CABAC | no | yes |
| FMO, ASO, RS | yes | no |
| PicAFF, MBAFF | no | yes |

The next table shows the maximum values for some common levels :

| Level Number | Video bitrate | Resolution & frame rate |
| --- | --- | --- |
| 1.3 | 768 Kbit/s | 352×288 ; 30 fps |
| 2.2 | 4 Mbit/s | 352×576 ; 25 fps |
| 3.1 | 14 Mbit/s | 720×576 ; 25 fps |
| 4.0 | 20 Mbit/s | 1920×1080 ; 30 fps |

To display mp4 videos in all browsers and devices, especially in IE9, it’s necessary to serve them with the right MIME type. If the videos are stored on Amazon AWS S3, the default content type is “application/octet-stream”. It’s easy to change the content type to video/mp4 in the Properties > Metadata menus.
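When videos are uploaded by a script rather than through the console, the content type can be determined before the upload. The Python sketch below uses only the standard library; the function name is hypothetical and the upload step itself is left out, since it depends on the client library in use.

```python
import mimetypes


def content_type_for(filename):
    """Guess the MIME type to store with an uploaded file."""
    guessed, _encoding = mimetypes.guess_type(filename)
    # Fall back to the generic type that S3 would apply by default.
    return guessed or "application/octet-stream"


print(content_type_for("trailer.mp4"))  # video/mp4
```

The returned value is what should be stored as the Content-Type metadata of the object.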

Further information about AVC is available at the following websites :