J. C. R. Licklider

IRE Transactions on Human Factors in Electronics,

volume HFE-1, pages 4-11, March 1960

Summary

Man-computer symbiosis is an expected development in cooperative interaction between men and electronic computers. It will involve very close coupling between the human and the electronic members of the partnership. The main aims are 1) to let computers facilitate formulative thinking as they now facilitate the solution of formulated problems, and 2) to enable men and computers to cooperate in making decisions and controlling complex situations without inflexible dependence on predetermined programs. In the anticipated symbiotic partnership, men will set the goals, formulate the hypotheses, determine the criteria, and perform the evaluations. Computing machines will do the routinizable work that must be done to prepare the way for insights and decisions in technical and scientific thinking. Preliminary analyses indicate that the symbiotic partnership will perform intellectual operations much more effectively than man alone can perform them. Prerequisites for the achievement of the effective, cooperative association include developments in computer time sharing, in memory components, in memory organization, in programming languages, and in input and output equipment.

1 Introduction

1.1 Symbiosis

The fig tree is pollinated only by the insect Blastophaga grossorum. The larva of the insect lives in the ovary of the fig tree, and there it gets its food. The tree and the insect are thus heavily interdependent: the tree cannot reproduce without the insect; the insect cannot eat without the tree; together, they constitute not only a viable but a productive and thriving partnership. This cooperative “living together in intimate association, or even close union, of two dissimilar organisms” is called symbiosis [27].

Man-computer symbiosis is a subclass of man-machine systems. There are many man-machine systems. At present, however, there are no man-computer symbioses. The purposes of this paper are to present the concept and, hopefully, to foster the development of man-computer symbiosis by analyzing some problems of interaction between men and computing machines, calling attention to applicable principles of man-machine engineering, and pointing out a few questions to which research answers are needed. The hope is that, in not too many years, human brains and computing machines will be coupled together very tightly, and that the resulting partnership will think as no human brain has ever thought and process data in a way not approached by the information-handling machines we know today.

1.2 Between “Mechanically Extended Man” and “Artificial Intelligence”

As a concept, man-computer symbiosis is different in an important way from what North [21] has called “mechanically extended man.” In the man-machine systems of the past, the human operator supplied the initiative, the direction, the integration, and the criterion. The mechanical parts of the systems were mere extensions, first of the human arm, then of the human eye. These systems certainly did not consist of “dissimilar organisms living together…” There was only one kind of organism, man, and the rest was there only to help him.

In one sense of course, any man-made system is intended to help man, to help a man or men outside the system. If we focus upon the human operator within the system, however, we see that, in some areas of technology, a fantastic change has taken place during the last few years. “Mechanical extension” has given way to replacement of men, to automation, and the men who remain are there more to help than to be helped. In some instances, particularly in large computer-centered information and control systems, the human operators are responsible mainly for functions that it proved infeasible to automate. Such systems (“humanly extended machines,” North might call them) are not symbiotic systems. They are “semi-automatic” systems, systems that started out to be fully automatic but fell short of the goal.

Man-computer symbiosis is probably not the ultimate paradigm for complex technological systems. It seems entirely possible that, in due course, electronic or chemical “machines” will outdo the human brain in most of the functions we now consider exclusively within its province. Even now, Gelernter’s IBM-704 program for proving theorems in plane geometry proceeds at about the same pace as Brooklyn high school students, and makes similar errors [12]. There are, in fact, several theorem-proving, problem-solving, chess-playing, and pattern-recognizing programs (too many for complete reference [1, 2, 5, 8, 11, 13, 17, 18, 19, 22, 23, 25]) capable of rivaling human intellectual performance in restricted areas; and Newell, Simon, and Shaw’s [20] “general problem solver” may remove some of the restrictions. In short, it seems worthwhile to avoid argument with (other) enthusiasts for artificial intelligence by conceding dominance in the distant future of cerebration to machines alone. There will nevertheless be a fairly long interim during which the main intellectual advances will be made by men and computers working together in intimate association. A multidisciplinary study group, examining future research and development problems of the Air Force, estimated that it would be 1980 before developments in artificial intelligence make it possible for machines alone to do much thinking or problem solving of military significance. That would leave, say, five years to develop man-computer symbiosis and 15 years to use it. The 15 may be 10 or 500, but those years should be intellectually the most creative and exciting in the history of mankind.

2 Aims of Man-Computer Symbiosis

Present-day computers are designed primarily to solve preformulated problems or to process data according to predetermined procedures. The course of the computation may be conditional upon results obtained during the computation, but all the alternatives must be foreseen in advance. (If an unforeseen alternative arises, the whole process comes to a halt and awaits the necessary extension of the program.) The requirement for preformulation or predetermination is sometimes no great disadvantage. It is often said that programming for a computing machine forces one to think clearly, that it disciplines the thought process. If the user can think his problem through in advance, symbiotic association with a computing machine is not necessary.

However, many problems that can be thought through in advance are very difficult to think through in advance. They would be easier to solve, and they could be solved faster, through an intuitively guided trial-and-error procedure in which the computer cooperated, turning up flaws in the reasoning or revealing unexpected turns in the solution. Other problems simply cannot be formulated without computing-machine aid. Poincaré anticipated the frustration of an important group of would-be computer users when he said, “The question is not, ‘What is the answer?’ The question is, ‘What is the question?’” One of the main aims of man-computer symbiosis is to bring the computing machine effectively into the formulative parts of technical problems.

The other main aim is closely related. It is to bring computing machines effectively into processes of thinking that must go on in “real time,” time that moves too fast to permit using computers in conventional ways. Imagine trying, for example, to direct a battle with the aid of a computer on such a schedule as this. You formulate your problem today. Tomorrow you spend with a programmer. Next week the computer devotes 5 minutes to assembling your program and 47 seconds to calculating the answer to your problem. You get a sheet of paper 20 feet long, full of numbers that, instead of providing a final solution, only suggest a tactic that should be explored by simulation. Obviously, the battle would be over before the second step in its planning was begun. To think in interaction with a computer in the same way that you think with a colleague whose competence supplements your own will require much tighter coupling between man and machine than is suggested by the example and than is possible today.

3 Need for Computer Participation in Formulative and Real-Time Thinking

The preceding paragraphs tacitly made the assumption that, if they could be introduced effectively into the thought process, the functions that can be performed by data-processing machines would improve or facilitate thinking and problem solving in an important way. That assumption may require justification.

3.1 A Preliminary and Informal Time-and-Motion Analysis of Technical Thinking

Despite the fact that there is a voluminous literature on thinking and problem solving, including intensive case-history studies of the process of invention, I could find nothing comparable to a time-and-motion-study analysis of the mental work of a person engaged in a scientific or technical enterprise. In the spring and summer of 1957, therefore, I tried to keep track of what one moderately technical person actually did during the hours he regarded as devoted to work. Although I was aware of the inadequacy of the sampling, I served as my own subject.

It soon became apparent that the main thing I did was to keep records, and the project would have become an infinite regress if the keeping of records had been carried through in the detail envisaged in the initial plan. It was not. Nevertheless, I obtained a picture of my activities that gave me pause. Perhaps my spectrum is not typical; I hope it is not, but I fear it is.

About 85 per cent of my “thinking” time was spent getting into a position to think, to make a decision, to learn something I needed to know. Much more time went into finding or obtaining information than into digesting it. Hours went into the plotting of graphs, and other hours into instructing an assistant how to plot. When the graphs were finished, the relations were obvious at once, but the plotting had to be done in order to make them so. At one point, it was necessary to compare six experimental determinations of a function relating speech-intelligibility to speech-to-noise ratio. No two experimenters had used the same definition or measure of speech-to-noise ratio. Several hours of calculating were required to get the data into comparable form. When they were in comparable form, it took only a few seconds to determine what I needed to know.

Throughout the period I examined, in short, my “thinking” time was devoted mainly to activities that were essentially clerical or mechanical: searching, calculating, plotting, transforming, determining the logical or dynamic consequences of a set of assumptions or hypotheses, preparing the way for a decision or an insight. Moreover, my choices of what to attempt and what not to attempt were determined to an embarrassingly great extent by considerations of clerical feasibility, not intellectual capability.

The main suggestion conveyed by the findings just described is that the operations that fill most of the time allegedly devoted to technical thinking are operations that can be performed more effectively by machines than by men. Severe problems are posed by the fact that these operations have to be performed upon diverse variables and in unforeseen and continually changing sequences. If those problems can be solved in such a way as to create a symbiotic relation between a man and a fast information-retrieval and data-processing machine, however, it seems evident that the cooperative interaction would greatly improve the thinking process.

It may be appropriate to acknowledge, at this point, that we are using the term “computer” to cover a wide class of calculating, data-processing, and information-storage-and-retrieval machines. The capabilities of machines in this class are increasing almost daily. It is therefore hazardous to make general statements about capabilities of the class. Perhaps it is equally hazardous to make general statements about the capabilities of men. Nevertheless, certain genotypic differences in capability between men and computers do stand out, and they have a bearing on the nature of possible man-computer symbiosis and the potential value of achieving it.

As has been said in various ways, men are noisy, narrow-band devices, but their nervous systems have very many parallel and simultaneously active channels. Relative to men, computing machines are very fast and very accurate, but they are constrained to perform only one or a few elementary operations at a time. Men are flexible, capable of “programming themselves contingently” on the basis of newly received information. Computing machines are single-minded, constrained by their “pre-programming.” Men naturally speak redundant languages organized around unitary objects and coherent actions and employing 20 to 60 elementary symbols. Computers “naturally” speak nonredundant languages, usually with only two elementary symbols and no inherent appreciation either of unitary objects or of coherent actions.

To be rigorously correct, those characterizations would have to include many qualifiers. Nevertheless, the picture of dissimilarity (and therefore potential supplementation) that they present is essentially valid. Computing machines can do readily, well, and rapidly many things that are difficult or impossible for man, and men can do readily and well, though not rapidly, many things that are difficult or impossible for computers. That suggests that a symbiotic cooperation, if successful in integrating the positive characteristics of men and computers, would be of great value. The differences in speed and in language, of course, pose difficulties that must be overcome.

4 Separable Functions of Men and Computers in the Anticipated Symbiotic Association

It seems likely that the contributions of human operators and equipment will blend together so completely in many operations that it will be difficult to separate them neatly in analysis. That would be the case if, in gathering data on which to base a decision, for example, both the man and the computer came up with relevant precedents from experience and if the computer then suggested a course of action that agreed with the man’s intuitive judgment. (In theorem-proving programs, computers find precedents in experience, and in the SAGE System, they suggest courses of action. The foregoing is not a far-fetched example.) In other operations, however, the contributions of men and equipment will be to some extent separable.

Men will set the goals and supply the motivations, of course, at least in the early years. They will formulate hypotheses. They will ask questions. They will think of mechanisms, procedures, and models. They will remember that such-and-such a person did some possibly relevant work on a topic of interest back in 1947, or at any rate shortly after World War II, and they will have an idea in what journals it might have been published. In general, they will make approximate and fallible, but leading, contributions, and they will define criteria and serve as evaluators, judging the contributions of the equipment and guiding the general line of thought.

In addition, men will handle the very-low-probability situations when such situations do actually arise. (In current man-machine systems, that is one of the human operator’s most important functions. The sum of the probabilities of very-low-probability alternatives is often much too large to neglect.) Men will fill in the gaps, either in the problem solution or in the computer program, when the computer has no mode or routine that is applicable in a particular circumstance.
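
A hypothetical calculation illustrates why the sum of many small probabilities cannot be neglected. The figures are assumptions chosen only for illustration: suppose a system faces n = 1000 mutually exclusive rare alternatives, each with probability 10^-4 of arising in a given period. No single alternative is worth programming for, yet together they are far from negligible:

```latex
\[
  P(\text{some rare alternative arises})
  \;=\; \sum_{i=1}^{n} p_i
  \;=\; 1000 \times 10^{-4}
  \;=\; 0.1
\]
```

Under these assumed figures, one period in ten would present a situation for which no routine had been prepared.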

The information-processing equipment, for its part, will convert hypotheses into testable models and then test the models against data (which the human operator may designate roughly and identify as relevant when the computer presents them for his approval). The equipment will answer questions. It will simulate the mechanisms and models, carry out the procedures, and display the results to the operator. It will transform data, plot graphs (“cutting the cake” in whatever way the human operator specifies, or in several alternative ways if the human operator is not sure what he wants). The equipment will interpolate, extrapolate, and transform. It will convert static equations or logical statements into dynamic models so the human operator can examine their behavior. In general, it will carry out the routinizable, clerical operations that fill the intervals between decisions.

In addition, the computer will serve as a statistical-inference, decision-theory, or game-theory machine to make elementary evaluations of suggested courses of action whenever there is enough basis to support a formal statistical analysis. Finally, it will do as much diagnosis, pattern-matching, and relevance-recognizing as it profitably can, but it will accept a clearly secondary status in those areas.

5 Prerequisites for Realization of Man-Computer Symbiosis

The data-processing equipment tacitly postulated in the preceding section is not available. The computer programs have not been written. There are in fact several hurdles that stand between the nonsymbiotic present and the anticipated symbiotic future. Let us examine some of them to see more clearly what is needed and what the chances are of achieving it.

5.1 Speed Mismatch Between Men and Computers

Any present-day large-scale computer is too fast and too costly for real-time cooperative thinking with one man. Clearly, for the sake of efficiency and economy, the computer must divide its time among many users. Timesharing systems are currently under active development. There are even arrangements to keep users from “clobbering” anything but their own personal programs.

It seems reasonable to envision, for a time 10 or 15 years hence, a “thinking center” that will incorporate the functions of present-day libraries together with anticipated advances in information storage and retrieval and the symbiotic functions suggested earlier in this paper. The picture readily enlarges itself into a network of such centers, connected to one another by wide-band communication lines and to individual users by leased-wire services. In such a system, the speed of the computers would be balanced, and the cost of the gigantic memories and the sophisticated programs would be divided by the number of users.

5.2 Memory Hardware Requirements

When we start to think of storing any appreciable fraction of a technical literature in computer memory, we run into billions of bits and, unless things change markedly, billions of dollars.

The first thing to face is that we shall not store all the technical and scientific papers in computer memory. We may store the parts that can be summarized most succinctly (the quantitative parts and the reference citations), but not the whole. Books are among the most beautifully engineered, and human-engineered, components in existence, and they will continue to be functionally important within the context of man-computer symbiosis. (Hopefully, the computer will expedite the finding, delivering, and returning of books.)

The second point is that a very important section of memory will be permanent: part indelible memory and part published memory. The computer will be able to write once into indelible memory, and then read back indefinitely, but the computer will not be able to erase indelible memory. (It may also over-write, turning all the 0’s into 1’s, as though marking over what was written earlier.) Published memory will be “read-only” memory. It will be introduced into the computer already structured. The computer will be able to refer to it repeatedly, but not to change it. These types of memory will become more and more important as computers grow larger. They can be made more compact than core, thin-film, or even tape memory, and they will be much less expensive. The main engineering problems will concern selection circuitry.
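
The distinction between indelible and published memory can be illustrated with a small sketch. The Python below is a toy model under stated assumptions, not a description of any hardware: the class names `IndelibleMemory` and `PublishedMemory` are hypothetical, and the over-write rule is modeled as a bitwise OR, so that a stored 0 may become a 1 but never the reverse.

```python
class IndelibleMemory:
    """Write-once words; over-writing can only turn 0 bits into 1 bits."""

    def __init__(self, size):
        self._words = [0] * size
        self._written = [False] * size

    def write(self, address, value):
        if self._written[address]:
            raise PermissionError("word already written; use overwrite()")
        self._words[address] = value
        self._written[address] = True

    def overwrite(self, address, mask):
        # OR-ing can set bits but never clear them, so nothing is erased.
        self._words[address] |= mask

    def read(self, address):
        return self._words[address]


class PublishedMemory:
    """Read-only memory that arrives already structured."""

    def __init__(self, contents):
        self._contents = tuple(contents)   # immutable copy of the supplied data

    def read(self, address):
        return self._contents[address]


indelible = IndelibleMemory(size=4)
indelible.write(0, 0b0101)
indelible.overwrite(0, 0b0010)     # marks over the word: 0b0101 becomes 0b0111
published = PublishedMemory([3, 1, 4])
```

In this model a second `write` to the same address is refused, while `overwrite` is always permitted because it cannot destroy a 1 that was already recorded.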

In so far as other aspects of memory requirement are concerned, we may count upon the continuing development of ordinary scientific and business computing machines. There is some prospect that memory elements will become as fast as processing (logic) elements. That development would have a revolutionary effect upon the design of computers.

5.3 Memory Organization Requirements

Implicit in the idea of man-computer symbiosis are the requirements that information be retrievable both by name and by pattern and that it be accessible through procedure much faster than serial search. At least half of the problem of memory organization appears to reside in the storage procedure. Most of the remainder seems to be wrapped up in the problem of pattern recognition within the storage mechanism or medium. Detailed discussion of these problems is beyond the present scope. However, a brief outline of one promising idea, “trie memory,” may serve to indicate the general nature of anticipated developments.

Trie memory is so called by its originator, Fredkin [10], because it is designed to facilitate retrieval of information and because the branching storage structure, when developed, resembles a tree. Most common memory systems store functions of arguments at locations designated by the arguments. (In one sense, they do not store the arguments at all. In another and more realistic sense, they store all the possible arguments in the framework structure of the memory.) The trie memory system, on the other hand, stores both the functions and the arguments. The argument is introduced into the memory first, one character at a time, starting at a standard initial register. Each argument register has one cell for each character of the ensemble (e.g., two for information encoded in binary form) and each character cell has within it storage space for the address of the next register. The argument is stored by writing a series of addresses, each one of which tells where to find the next. At the end of the argument is a special “end-of-argument” marker. Then follow directions to the function, which is stored in one or another of several ways, either further trie structure or “list structure” often being most effective.

The trie memory scheme is inefficient for small memories, but it becomes increasingly efficient in using available storage space as memory size increases. The attractive features of the scheme are these: 1) The retrieval process is extremely simple. Given the argument, enter the standard initial register with the first character, and pick up the address of the second. Then go to the second register, and pick up the address of the third, etc. 2) If two arguments have initial characters in common, they use the same storage space for those characters. 3) The lengths of the arguments need not be the same, and need not be specified in advance. 4) No room in storage is reserved for or used by any argument until it is actually stored. The trie structure is created as the items are introduced into the memory. 5) A function can be used as an argument for another function, and that function as an argument for the next. Thus, for example, by entering with the argument, “matrix multiplication,” one might retrieve the entire program for performing a matrix multiplication on the computer. 6) By examining the storage at a given level, one can determine what thus-far similar items have been stored. For example, if there is no citation for Egan, J. P., it is but a step or two backward to pick up the trail of Egan, James … .
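
The storage and retrieval procedure described above can be sketched in a modern language. The following Python is an illustrative reading of the scheme, not Fredkin's implementation: the names `Register`, `TrieMemory`, `store`, `retrieve`, and `similar` are assumptions, a dictionary stands in for the character cells of each register, object references stand in for register addresses, and the stored strings are placeholder data.

```python
class Register:
    """One argument register: a cell per character, each pointing to the next register."""

    def __init__(self):
        self.cells = {}               # character -> next Register, created only when needed
        self.end_of_argument = False  # the special end-of-argument marker
        self.function = None          # what is stored after the marker


class TrieMemory:
    def __init__(self):
        self.initial = Register()     # the standard initial register

    def store(self, argument, function):
        register = self.initial
        for character in argument:
            # Shared leading characters reuse existing registers (feature 2);
            # new registers appear only as items are introduced (feature 4).
            register = register.cells.setdefault(character, Register())
        register.end_of_argument = True
        register.function = function

    def _walk(self, characters):
        register = self.initial
        for character in characters:
            register = register.cells.get(character)
            if register is None:
                return None
        return register

    def retrieve(self, argument):
        register = self._walk(argument)
        if register is None or not register.end_of_argument:
            raise KeyError(argument)
        return register.function

    def similar(self, prefix):
        """List the stored arguments that begin with prefix (feature 6)."""
        start = self._walk(prefix)
        if start is None:
            return []
        found, stack = [], [(prefix, start)]
        while stack:
            text, register = stack.pop()
            if register.end_of_argument:
                found.append(text)
            for character, following in register.cells.items():
                stack.append((text + character, following))
        return sorted(found)


memory = TrieMemory()
memory.store("Egan, James", "citation A")
memory.store("Egan, John", "citation B")
memory.store("matrix multiplication", "program text")
```

With this placeholder data, `memory.retrieve("Egan, J. P.")` fails with a `KeyError`, but `memory.similar("Egan, J")` backs up to the shared characters and lists the entries stored under that prefix. Arguments of different lengths coexist without any length being declared in advance (feature 3).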

The properties just described do not include all the desired ones, but they bring computer storage into resonance with human operators and their predilection to designate things by naming or pointing.

5.4 The Language Problem

The basic dissimilarity between human languages and computer languages may be the most serious obstacle to true symbiosis. It is reassuring, however, to note what great strides have already been made, through interpretive programs and particularly through assembly or compiling programs such as FORTRAN, to adapt computers to human language forms. The “Information Processing Language” of Shaw, Newell, Simon, and Ellis [24] represents another line of rapprochement. And, in ALGOL and related systems, men are proving their flexibility by adopting standard formulas of representation and expression that are readily translatable into machine language.

For the purposes of real-time cooperation between men and computers, it will be necessary, however, to make use of an additional and rather different principle of communication and control. The idea may be highlighted by comparing instructions ordinarily addressed to intelligent human beings with instructions ordinarily used with computers. The latter specify precisely the individual steps to take and the sequence in which to take them. The former present or imply something about incentive or motivation, and they supply a criterion by which the human executor of the instructions will know when he has accomplished his task. In short: instructions directed to computers specify courses; instructions directed to human beings specify goals.

Men appear to think more naturally and easily in terms of goals than in terms of courses. True, they usually know something about directions in which to travel or lines along which to work, but few start out with precisely formulated itineraries. Who, for example, would depart from Boston for Los Angeles with a detailed specification of the route? Instead, to paraphrase Wiener, men bound for Los Angeles try continually to decrease the amount by which they are not yet in the smog.

Computer instruction through specification of goals is being approached along two paths. The first involves problem-solving, hill-climbing, self-organizing programs. The second involves real-time concatenation of preprogrammed segments and closed subroutines which the human operator can designate and call into action simply by name.

Along the first of these paths, there has been promising exploratory work. It is clear that, working within the loose constraints of predetermined strategies, computers will in due course be able to devise and simplify their own procedures for achieving stated goals. Thus far, the achievements have not been substantively important; they have constituted only “demonstration in principle.” Nevertheless, the implications are far-reaching.
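
The difference between specifying a course and specifying a goal can be made concrete with a toy hill-climbing routine. In this minimal sketch, the function name `hill_climb`, the one-dimensional position, and the fixed step rule are all assumptions chosen for illustration. The caller supplies only a criterion (the amount by which the goal has not yet been reached), and the routine keeps whichever neighboring step decreases it.

```python
def hill_climb(start, remaining, step=1.0, max_steps=10_000):
    """Repeatedly move to the neighbor that most reduces remaining(position)."""
    position = start
    for _ in range(max_steps):
        best = min((position - step, position + step), key=remaining)
        if remaining(best) >= remaining(position):
            break                  # no neighbor improves on the current position
        position = best
    return position


# Goal specification: "be at coordinate 42", expressed only as a criterion.
goal = 42.0
reached = hill_climb(start=0.0, remaining=lambda x: abs(x - goal))
```

No itinerary is given to the routine; the same call works from any starting point, which is the sense in which the instruction specifies a goal and not a course.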

Although the second path is simpler and apparently capable of earlier realization, it has been relatively neglected. Fredkin’s trie memory provides a promising paradigm. We may in due course see a serious effort to develop computer programs that can be connected together like the words and phrases of speech to do whatever computation or control is required at the moment. The consideration that holds back such an effort, apparently, is that the effort would produce nothing that would be of great value in the context of existing computers. It would be unrewarding to develop the language before there are any computing machines capable of responding meaningfully to it.
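
The second path, concatenating preprogrammed segments by name, might look something like the following in a present-day language. This is a hypothetical sketch: the registry `SEGMENTS`, the decorator `segment`, the function `run`, and the three sample routines are invented for illustration, and a plain dictionary stands in for whatever name-addressed store (such as trie memory) an actual system would use.

```python
SEGMENTS = {}


def segment(name):
    """Register a preprogrammed routine under a name the operator can call."""
    def register(function):
        SEGMENTS[name] = function
        return function
    return register


@segment("square")
def square(values):
    return [v * v for v in values]


@segment("sum")
def total(values):
    return sum(values)


@segment("root")
def root(value):
    return value ** 0.5


def run(names, data):
    """Concatenate the named segments, feeding each result to the next."""
    for name in names:
        data = SEGMENTS[name](data)
    return data


# The operator strings together three names to get the length of the vector (3, 4).
length = run(["square", "sum", "root"], [3, 4])
```

The operator composes a computation at the moment of need by naming segments in order, without writing any of the individual steps.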

5.5 Input and Output Equipment

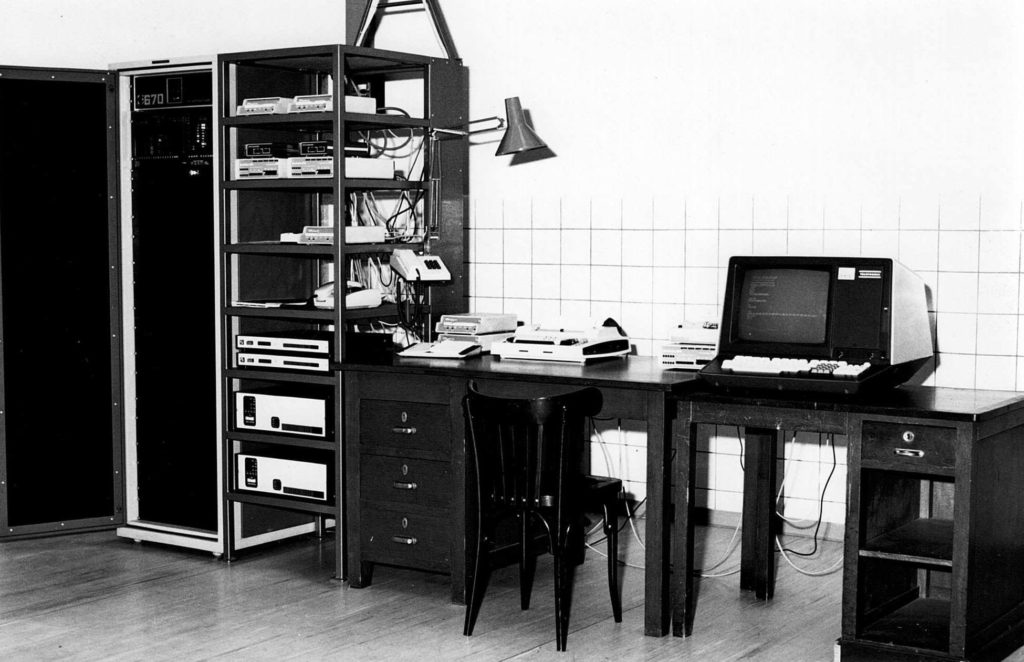

The department of data processing that seems least advanced, in so far as the requirements of man-computer symbiosis are concerned, is the one that deals with input and output equipment or, as it is seen from the human operator’s point of view, displays and controls. Immediately after saying that, it is essential to make qualifying comments, because the engineering of equipment for high-speed introduction and extraction of information has been excellent, and because some very sophisticated display and control techniques have been developed in such research laboratories as the Lincoln Laboratory. By and large, in generally available computers, however, there is almost no provision for any more effective, immediate man-machine communication than can be achieved with an electric typewriter.

Displays seem to be in a somewhat better state than controls. Many computers plot graphs on oscilloscope screens, and a few take advantage of the remarkable capabilities, graphical and symbolic, of the charactron display tube. Nowhere, to my knowledge, however, is there anything approaching the flexibility and convenience of the pencil and doodle pad or the chalk and blackboard used by men in technical discussion.

1) Desk-Surface Display and Control: Certainly, for effective man-computer interaction, it will be necessary for the man and the computer to draw graphs and pictures and to write notes and equations to each other on the same display surface. The man should be able to present a function to the computer, in a rough but rapid fashion, by drawing a graph. The computer should read the man’s writing, perhaps on the condition that it be in clear block capitals, and it should immediately post, at the location of each hand-drawn symbol, the corresponding character as interpreted and put into precise type-face. With such an input-output device, the operator would quickly learn to write or print in a manner legible to the machine. He could compose instructions and subroutines, set them into proper format, and check them over before introducing them finally into the computer’s main memory. He could even define new symbols, as Gilmore and Savell [14] have done at the Lincoln Laboratory, and present them directly to the computer. He could sketch out the format of a table roughly and let the computer shape it up with precision. He could correct the computer’s data, instruct the machine via flow diagrams, and in general interact with it very much as he would with another engineer, except that the “other engineer” would be a precise draftsman, a lightning calculator, a mnemonic wizard, and many other valuable partners all in one.

2) Computer-Posted Wall Display: In some technological systems, several men share responsibility for controlling vehicles whose behaviors interact. Some information must be presented simultaneously to all the men, preferably on a common grid, to coordinate their actions. Other information is of relevance only to one or two operators. There would be only a confusion of uninterpretable clutter if all the information were presented on one display to all of them. The information must be posted by a computer, since manual plotting is too slow to keep it up to date.

The problem just outlined is even now a critical one, and it seems certain to become more and more critical as time goes by. Several designers are convinced that displays with the desired characteristics can be constructed with the aid of flashing lights and time-sharing viewing screens based on the light-valve principle.

The large display should be supplemented, according to most of those who have thought about the problem, by individual display-control units. The latter would permit the operators to modify the wall display without leaving their locations. For some purposes, it would be desirable for the operators to be able to communicate with the computer through the supplementary displays and perhaps even through the wall display. At least one scheme for providing such communication seems feasible.

The large wall display and its associated system are relevant, of course, to symbiotic cooperation between a computer and a team of men. Laboratory experiments have indicated repeatedly that informal, parallel arrangements of operators, coordinating their activities through reference to a large situation display, have important advantages over the arrangement, more widely used, that locates the operators at individual consoles and attempts to correlate their actions through the agency of a computer. This is one of several operator-team problems in need of careful study.

3) Automatic Speech Production and Recognition: How desirable and how feasible is speech communication between human operators and computing machines? That compound question is asked whenever sophisticated data-processing systems are discussed. Engineers who work and live with computers take a conservative attitude toward the desirability. Engineers who have had experience in the field of automatic speech recognition take a conservative attitude toward the feasibility. Yet there is continuing interest in the idea of talking with computing machines. In large part, the interest stems from realization that one can hardly take a military commander or a corporation president away from his work to teach him to type. If computing machines are ever to be used directly by top-level decision makers, it may be worthwhile to provide communication via the most natural means, even at considerable cost.

Preliminary analysis of his problems and time scales suggests that a corporation president would be interested in a symbiotic association with a computer only as an avocation. Business situations usually move slowly enough that there is time for briefings and conferences. It seems reasonable, therefore, for computer specialists to be the ones who interact directly with computers in business offices.

The military commander, on the other hand, faces a greater probability of having to make critical decisions in short intervals of time. It is easy to overdramatize the notion of the ten-minute war, but it would be dangerous to count on having more than ten minutes in which to make a critical decision. As military system ground environments and control centers grow in capability and complexity, therefore, a real requirement for automatic speech production and recognition in computers seems likely to develop. Certainly, if the equipment were already developed, reliable, and available, it would be used.

In so far as feasibility is concerned, speech production poses less severe problems of a technical nature than does automatic recognition of speech sounds. A commercial electronic digital voltmeter now reads aloud its indications, digit by digit. For eight or ten years, at the Bell Telephone Laboratories, the Royal Institute of Technology (Stockholm), the Signals Research and Development Establishment (Christchurch), the Haskins Laboratory, and the Massachusetts Institute of Technology, Dunn [6], Fant [7], Lawrence [15], Cooper [3], Stevens [26], and their co-workers, have demonstrated successive generations of intelligible automatic talkers. Recent work at the Haskins Laboratory has led to the development of a digital code, suitable for use by computing machines, that makes an automatic voice utter intelligible connected discourse [16].

The feasibility of automatic speech recognition depends heavily upon the size of the vocabulary of words to be recognized and upon the diversity of talkers and accents with which it must work. Ninety-eight per cent correct recognition of naturally spoken decimal digits was demonstrated several years ago at the Bell Telephone Laboratories and at the Lincoln Laboratory [4], [9]. To go a step up the scale of vocabulary size, we may say that an automatic recognizer of clearly spoken alpha-numerical characters can almost surely be developed now on the basis of existing knowledge. Since untrained operators can read at least as rapidly as trained ones can type, such a device would be a convenient tool in almost any computer installation.

For real-time interaction on a truly symbiotic level, however, a vocabulary of about 2000 words, e.g., 1000 words of something like basic English and 1000 technical terms, would probably be required. That constitutes a challenging problem. In the consensus of acousticians and linguists, construction of a recognizer of 2000 words cannot be accomplished now. However, there are several organizations that would happily undertake to develop an automatic recognizer for such a vocabulary on a five-year basis. They would stipulate that the speech be clear speech, dictation style, without unusual accent.

Although detailed discussion of techniques of automatic speech recognition is beyond the present scope, it is fitting to note that computing machines are playing a dominant role in the development of automatic speech recognizers. They have contributed the impetus that accounts for the present optimism, or rather for the optimism presently found in some quarters. Two or three years ago, it appeared that automatic recognition of sizeable vocabularies would not be achieved for ten or fifteen years; that it would have to await much further, gradual accumulation of knowledge of acoustic, phonetic, linguistic, and psychological processes in speech communication. Now, however, many see a prospect of accelerating the acquisition of that knowledge with the aid of computer processing of speech signals, and not a few workers have the feeling that sophisticated computer programs will be able to perform well as speech-pattern recognizers even without the aid of much substantive knowledge of speech signals and processes. Putting those two considerations together brings the estimate of the time required to achieve practically significant speech recognition down to perhaps five years, the five years just mentioned.

References

[1] A. Bernstein and M. deV. Roberts, “Computer versus chess-player,” Scientific American, vol. 198, pp. 96-98; June, 1958.

[2] W. W. Bledsoe and I. Browning, “Pattern Recognition and Reading by Machine,” presented at the Eastern Joint Computer Conf., Boston, Mass.; December, 1959.

[3] F. S. Cooper, et al., “Some experiments on the perception of synthetic speech sounds,” J. Acoust. Soc. Amer., vol. 24, pp. 597-606; November, 1952.

[4] K. H. Davis, R. Biddulph, and S. Balashek, “Automatic recognition of spoken digits,” in W. Jackson, Communication Theory, Butterworths Scientific Publications, London, Eng., pp. 433-441; 1953.

[5] G. P. Dinneen, “Programming pattern recognition,” Proc. WJCC, pp. 94-100; March, 1955.

[6] H. K. Dunn, “The calculation of vowel resonances, and an electrical vocal tract,” J. Acoust. Soc. Amer., vol. 22, pp. 740-753; November, 1950.

[7] G. Fant, “On the Acoustics of Speech,” paper presented at the Third Internatl. Congress on Acoustics, Stuttgart, Ger.; September, 1959.

[8] B. G. Farley and W. A. Clark, “Simulation of self-organizing systems by digital computers,” IRE Trans. on Information Theory, vol. IT-4, pp. 76-84; September, 1954.

[9] J. W. Forgie and C. D. Forgie, “Results obtained from a vowel recognition computer program,” J. Acoust. Soc. Amer., vol. 31, pp. 1480-1489; November, 1959.

[10] E. Fredkin, “Trie memory,” Communications of the ACM, pp. 490-499; September, 1960.

[11] R. M. Friedberg, “A learning machine: Part I,” IBM J. Res. & Dev., vol. 2, pp. 2-13; January, 1958.

[12] H. Gelernter, “Realization of a Geometry Theorem Proving Machine,” Unesco, NS, ICIP, 1.6.6, Internatl. Conf. on Information Processing, Paris, France; June, 1959.

[13] P. C. Gilmore, “A Program for the Production of Proofs for Theorems Derivable Within the First Order Predicate Calculus from Axioms,” Unesco, NS, ICIP, 1.6.14, Internatl. Conf. on Information Processing, Paris, France; June, 1959.

[14] J. T. Gilmore and R. E. Savell, “The Lincoln Writer,” Lincoln Laboratory, M. I. T., Lexington, Mass., Rept. 51-8; October, 1959.

[15] W. Lawrence, et al., “Methods and Purposes of Speech Synthesis,” Signals Res. and Dev. Estab., Ministry of Supply, Christchurch, Hants, England, Rept. 56/1457; March, 1956.

[16] A. M. Liberman, F. Ingemann, L. Lisker, P. Delattre, and F. S. Cooper, “Minimal rules for synthesizing speech,” J. Acoust. Soc. Amer., vol. 31, pp. 1490-1499; November, 1959.

[17] A. Newell, “The chess machine: an example of dealing with a complex task by adaptation,” Proc. WJCC, pp. 101-108; March, 1955.

[18] A. Newell and J. C. Shaw, “Programming the logic theory machine,” Proc. WJCC, pp. 230-240; March, 1957.

[19] A. Newell, J. C. Shaw, and H. A. Simon, “Chess-playing programs and the problem of complexity,” IBM J. Res. & Dev., vol. 2, pp. 320-335; October, 1958.

[20] A. Newell, H. A. Simon, and J. C. Shaw, “Report on a general problem-solving program,” Unesco, NS, ICIP, 1.6.8, Internatl. Conf. on Information Processing, Paris, France; June, 1959.

[21] J. D. North, “The rational behavior of mechanically extended man,” Boulton Paul Aircraft Ltd., Wolverhampton, Eng.; September, 1954.

[22] O. G. Selfridge, “Pandemonium, a paradigm for learning,” Proc. Symp. Mechanisation of Thought Processes, Natl. Physical Lab., Teddington, Eng.; November, 1958.

[23] C. E. Shannon, “Programming a computer for playing chess,” Phil. Mag., vol. 41, pp. 256-75; March, 1950.

[24] J. C. Shaw, A. Newell, H. A. Simon, and T. O. Ellis, “A command structure for complex information processing,” Proc. WJCC, pp. 119-128; May, 1958.

[25] H. Sherman, “A Quasi-Topological Method for Recognition of Line Patterns,” Unesco, NS, ICIP, H.L.5, Internatl. Conf. on Information Processing, Paris, France; June, 1959.

[26] K. N. Stevens, S. Kasowski, and C. G. Fant, “Electric analog of the vocal tract,” J. Acoust. Soc. Amer., vol. 25, pp. 734-742; July, 1953.

[27] Webster’s New International Dictionary, 2nd ed., G. and C. Merriam Co., Springfield, Mass., p. 2555; 1958.